We may hope that machines will eventually compete with men in all purely intellectual fields. But which are the best ones to start with? Even this is a difficult decision. Many people think that a very abstract activity, like the playing of chess, would be best. It can also be maintained that it is best to provide the machine with the best sense organs that money can buy, and then teach it to understand and speak English. This process could follow the normal teaching of a child. Things would be pointed out and named, etc. Again I do not know what the right answer is, but I think both approaches should be tried.

— Alan Turing, 1950

When Alan Turing penned these words over seven decades ago, he posed one of the central questions of artificial intelligence: how do we teach machines to think? In 2025, we’ve seen extensive work on both of the paths Turing considered: AI models that are taught to understand and speak English, and AI models that play chess. Reasoning agents combine these approaches in language models that can both speak human language and reason in it.

In this post, we’ll look at how Large Language Models (LLMs) are trained first to speak languages like English, and then to reason about things like playing chess. This is the third post in a series on reasoning. In my previous posts, I looked at how we measure reasoning in AI and explored techniques for eliciting reasoning from LLMs.

Types of LLM training

Modern LLMs are first trained to do next-token prediction. This foundational training method involves passing billions of texts through an LLM, one word (or subword) at a time, and training it to predict which word comes next. When the LLM predicts the wrong word, its parameters are adjusted to make the correct word more likely the next time around. This is what we mean by “training”: the modification of a model’s parameters toward some goal. Next-token prediction training asks the model to parse all of the books, blog posts, and internet forums it can, not to understand their meaning initially, but to develop an idea of which words naturally follow others. When GPT-3, the predecessor of the models behind ChatGPT, was pretrained on a corpus of roughly 500 billion tokens drawn largely from the internet, it wasn’t explicitly taught grammar rules or facts; it simply learned the statistical patterns that govern how language flows.
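To make the idea concrete, here is a minimal sketch of next-token prediction training under a big simplifying assumption: instead of a neural network over subword tokens, the “model” is just a table of logits recording which word tends to follow which (a bigram model). Every time it sees a word pair, a gradient step on the cross-entropy loss nudges the table toward the word that actually came next. The function names are hypothetical, chosen for illustration.

```python
import math

def softmax_row(row):
    """Turn a dict of logits into a dict of probabilities."""
    peak = max(row.values())
    exps = {tok: math.exp(v - peak) for tok, v in row.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def train_bigram(tokens, epochs=200, lr=0.1):
    """Fit logits[prev][next] by gradient descent on next-token cross-entropy."""
    vocab = sorted(set(tokens))
    logits = {a: {b: 0.0 for b in vocab} for a in vocab}
    pairs = list(zip(tokens[:-1], tokens[1:]))  # (current token, token that follows it)
    for _ in range(epochs):
        for prev, actual_next in pairs:
            probs = softmax_row(logits[prev])
            for tok in vocab:
                # d(-log p(actual_next | prev)) / d logit[tok] = p(tok) - 1[tok == actual_next]
                grad = probs[tok] - (1.0 if tok == actual_next else 0.0)
                logits[prev][tok] -= lr * grad
    return logits

def predict_next(logits, prev):
    """Greedy prediction: the most likely token to follow `prev`."""
    probs = softmax_row(logits[prev])
    return max(probs, key=probs.get)

if __name__ == "__main__":
    corpus = "the cat sat on the mat the cat ate".split()
    model = train_bigram(corpus)
    print(predict_next(model, "the"))  # prints: cat ("cat" follows "the" twice, "mat" once)
```

Nothing here “knows” grammar; the table simply ends up reflecting how often each word followed another, which is the statistical flavor of learning the paragraph describes.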

Following next-token prediction pretraining on vast text corpora, modern LLMs are trained with supervised fine-tuning on curated examples and, increasingly, with Reinforcement Learning (RL), either from human feedback to align them with human preferences or, for reasoning models, to build problem-solving capabilities. What’s remarkable is how rapidly this field has progressed, with each stage building on, and sometimes radically transforming, the capabilities of the previous one. As discussed in the last post, much of this progress has come from taking ideas from various parts of AI and applying them to LLMs. Today, we’ll see how that is the case with Reinforcement Learning.

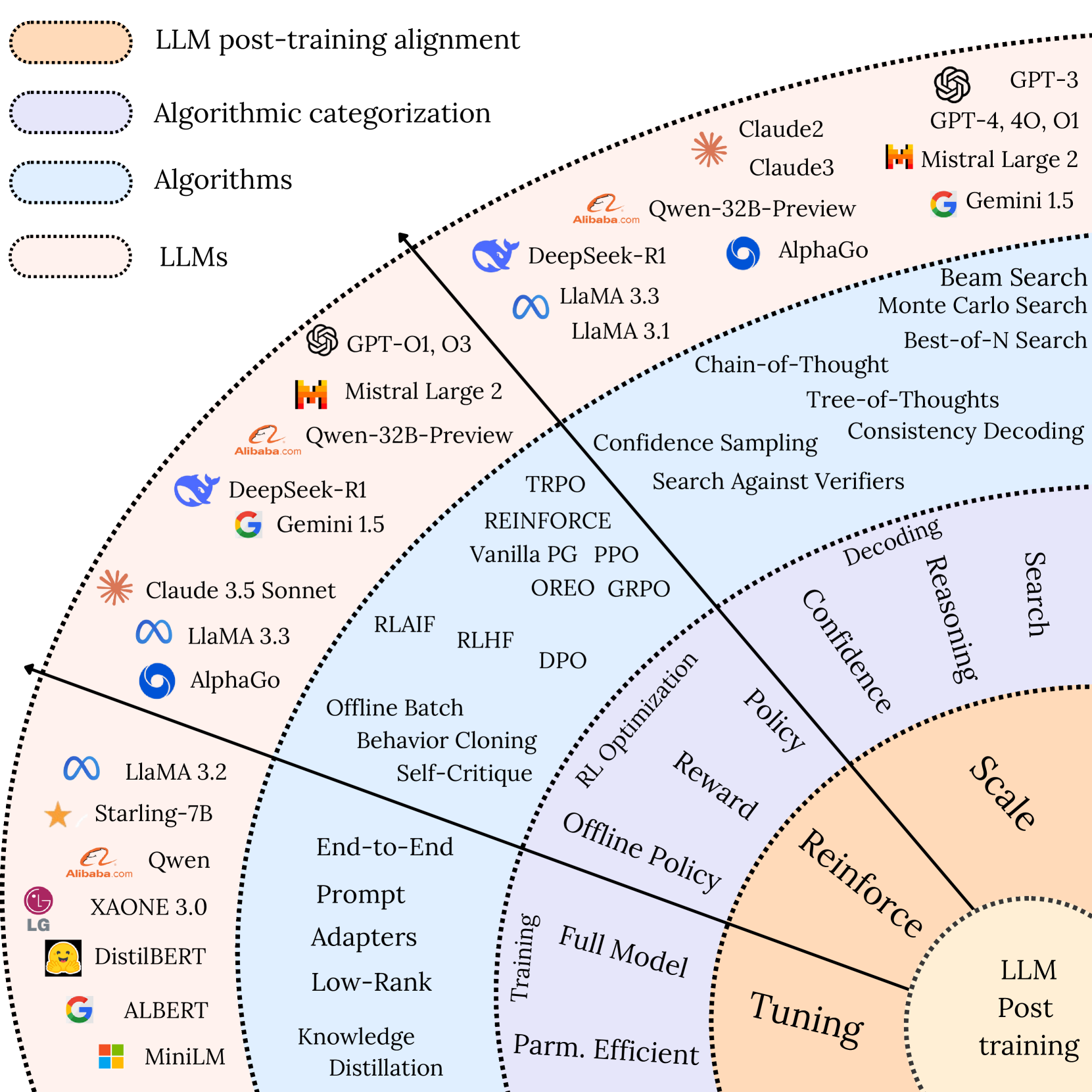

In a recent survey, these methods were categorized into fine-tuning, RL, and test-time scaling methods. We covered the test-time methods in the last post. The methods grouped under “fine-tuning” are general ways to adapt large models, and we’ll first cover how they can be used to fine-tune a model toward reasoning. The main focus today, exemplified by DeepSeek’s R1 model, is the family of RL methods, which we’ll look at in greater detail.

Supervised Fine-Tuning: The First Step

Supervised Fine-Tuning (SFT) is the second step in the current training pipeline, following pretraining on next-token prediction. SFT is essentially a crash course for your LLM on how to behave in specific situations. You gather a curated dataset of high-quality examples (prompt and response pairs demonstrating the behavior you want) and train the model to respond the way the examples do. Think of it as showing a bright but socially awkward intern thousands of examples of good customer service emails rather than trying to explain abstract concepts like “be helpful” or “show empathy.” The model learns by example, adjusting its parameters to make outputs that match your showcase responses more likely. This is one of the methods used to take ChatGPT from repeating random internet text to responding to user queries like an assistant.
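One common way to set up an SFT example is sketched below, assuming a tokenizer has already turned text into integer IDs (the IDs here are made up). The prompt and response are concatenated, but only response tokens count toward the loss, so the model is trained to produce the showcase answer rather than to reproduce the prompt. The `-100` marker follows the convention of PyTorch’s `nn.CrossEntropyLoss`, whose `ignore_index` defaults to `-100`; the helper names are hypothetical.

```python
IGNORE_INDEX = -100  # PyTorch's nn.CrossEntropyLoss skips targets equal to ignore_index (default -100)

def build_sft_example(prompt_ids, response_ids):
    """Concatenate prompt and response; only response tokens become training targets."""
    input_ids = list(prompt_ids) + list(response_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return input_ids, labels

def masked_mean_nll(target_logprobs, labels):
    """Average negative log-likelihood over positions whose label is not ignored.

    target_logprobs[i] is the log-probability the model assigned to labels[i].
    """
    kept = [-lp for lp, label in zip(target_logprobs, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)

if __name__ == "__main__":
    ids, labels = build_sft_example([101, 102, 103], [7, 8])
    print(ids, labels)
    print(masked_mean_nll([-5.0, -5.0, -5.0, -1.0, -3.0], labels))
```

In a real training loop the labels are also shifted one position relative to the inputs, so each position predicts the token after it; that detail is omitted to keep the masking idea visible.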

For reasoning tasks, SFT is used to train models to output reasoning text, like the type elicited by Chain-of-Thought (CoT) prompting. I discussed this in my previous post, but, briefly, if you prompt a language model to explain its step-by-step reasoning for a problem, often by giving an example in the prompt, it will generate text that replicates this step-by-step reasoning in the response. This “thinking out loud” improves results on question-answering tasks. SFT takes existing prompt-response pairs with CoT reasoning in them and trains a model to respond like those examples. However, SFT has a fundamental limitation for reasoning: it can only teach the model to imitate reasoning patterns it has seen during training, rather than develop genuine problem-solving abilities. It’s like teaching someone to follow a recipe perfectly without understanding the underlying cooking principles: fine for familiar scenarios, but brittle when facing novel situations.
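What does a CoT training example actually look like? Below is a hypothetical sketch that builds one prompt-response pair for multiplying a two-digit number by a one-digit number, spelling out each intermediate step before the final answer. The template and the `cot_example` helper are assumptions for illustration; real CoT datasets are far more varied than a fixed template.

```python
def cot_example(a, b):
    """Build a hypothetical prompt/response pair that works out a x b step by step."""
    if not 10 <= a <= 99:
        raise ValueError("this toy template assumes a two-digit first factor")
    tens, ones = divmod(a, 10)
    steps = [
        f"{a} = {tens * 10} + {ones}.",             # split the number into tens and ones
        f"{tens * 10} x {b} = {tens * 10 * b}.",    # multiply the tens part
        f"{ones} x {b} = {ones * b}.",              # multiply the ones part
        f"{tens * 10 * b} + {ones * b} = {a * b}.", # add the partial products
    ]
    prompt = f"What is {a} x {b}? Think step by step."
    response = " ".join(steps) + f" The answer is {a * b}."
    return {"prompt": prompt, "response": response}

if __name__ == "__main__":
    print(cot_example(27, 3)["response"])
```

A model fine-tuned on many pairs like this learns to emit the intermediate steps, which is exactly the imitation that SFT provides, along with its limitation: it has only seen this one way of breaking the problem down.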

SFT also requires a huge number of training examples; for reasoning tasks, that means huge datasets of responses written out as Chains-of-Thought. This isn’t a type of data that exists plentifully on the internet: there is no social network where people write out, step by step, their verbal thinking on how to multiply 27 by 3 (but that still sounds more fun than Twitter). So, companies have started using knowledge distillation, where the outputs of a “teacher” model are used to train a “student” model. This is why the allegation that DeepSeek had “taken inspiration” from OpenAI’s models caused such a stir; DeepSeek may have used OpenAI’s models to generate the training data for its reasoning models. The trillion-dollar question: is learning from a competitor’s outputs fair use or IP theft? The lawyers are still duking that one out. However, the real advance with DeepSeek wasn’t being able to distill OpenAI’s models (allegedly); it was the use of Reinforcement Learning to train DeepSeek R1 to reason even better.
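In its simplest form, distillation for reasoning just means asking a teacher model to answer many prompts and saving its responses as SFT data. The sketch below assumes the teacher is any callable from prompt to text (`toy_teacher` is a hypothetical stand-in, not a real model API), and it adds one common refinement as an assumption: discard teacher responses whose final answer does not match a known reference, so the student only imitates traces that reached the right result.

```python
import re
from typing import Callable, List, Optional, Tuple

def final_answer(text: str) -> Optional[int]:
    """Pull the integer after 'The answer is' (a formatting convention assumed here)."""
    match = re.search(r"The answer is (-?\d+)", text)
    return int(match.group(1)) if match else None

def distill_dataset(problems: List[Tuple[str, int]], teacher: Callable[[str], str]) -> List[dict]:
    """Collect teacher responses as SFT pairs, keeping only those whose answer checks out."""
    dataset = []
    for prompt, reference in problems:
        response = teacher(prompt)
        if final_answer(response) == reference:
            dataset.append({"prompt": prompt, "response": response})
    return dataset

def toy_teacher(prompt: str) -> str:
    """Hypothetical stand-in for a large teacher model; it gets the second problem wrong."""
    if "27 x 3" in prompt:
        return "20 x 3 = 60 and 7 x 3 = 21, so 60 + 21 = 81. The answer is 81."
    return "I will guess. The answer is 50."

if __name__ == "__main__":
    problems = [("What is 27 x 3?", 81), ("What is 12 x 4?", 48)]
    for row in distill_dataset(problems, toy_teacher):
        print(row)
```

The resulting list of prompt-response pairs would then be fed to ordinary SFT for the student model.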

Reinforcement Learning

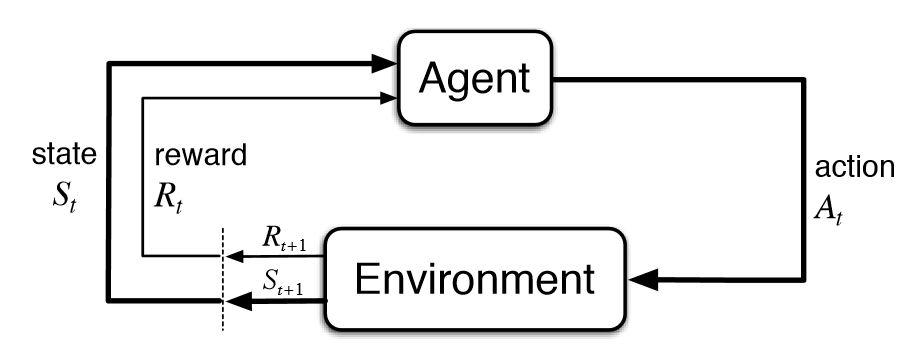

Reinforcement Learning (RL) is the final step in the current reasoning pipeline, following next-token prediction training and supervised fine-tuning. RL differs from supervised learning in one key respect: there’s no dataset of correct answers. Instead, there’s a reward signal that indicates when an agent has done something desirable. This approach mirrors how we learn many complex skills in life: not through explicit instruction but through trial, error, and feedback. Just last month, Richard Sutton and Andrew Barto received the 2024 Turing Award (computing’s equivalent of the Nobel Prize) for their pioneering work in RL, which dates back to the 1980s, long before the current AI boom. Their techniques have powered everything from AlphaGo’s defeat of world champion Lee Sedol to optimizing nuclear reactor operations. While supervised learning tells a system “the answer to this question is X,” reinforcement learning simply says “that attempt earned you Y points; figure out why and do better next time.” This distinction is crucial because it allows systems to be optimized toward goals rather than toward specific data. In the context of reasoning LLMs, RL lets models discover new reasoning strategies from a reward given only for the final answer, even when the path to that answer isn’t known in advance.
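The “you earned Y points” style of feedback can be seen in the simplest RL setting, a multi-armed bandit: several options (arms) pay out with unknown probabilities, and the learner only ever sees the reward for the arm it tried. The sketch below uses epsilon-greedy exploration, a textbook method chosen here for illustration; the payout probabilities and function name are made up. No one ever tells the learner which arm is correct.

```python
import random

def epsilon_greedy_bandit(success_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which arm pays best from rewards alone; no correct answers are ever given."""
    rng = random.Random(seed)
    n_arms = len(success_probs)
    estimates = [0.0] * n_arms  # running average reward observed for each arm
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try an arm at random
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the current best guess
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0  # the environment's only feedback
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean update
    return estimates

if __name__ == "__main__":
    print(epsilon_greedy_bandit([0.2, 0.5, 0.8]))
```

The `epsilon` parameter trades off trying new options against repeating what has worked so far, the same trial-and-error tension that runs through every RL method.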

RL methods formulate problems as a sequence of actions taken from states. Think of a chess game: the state is the board at a given time, and an action is moving a piece, like advancing a pawn. Some actions yield rewards, like moving your Queen to put the opponent’s King in checkmate. RL methods try to determine the best action to take at each step in order to collect the most reward over the whole sequence.
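Chess is far too large to solve by hand, but the same state, action, and reward vocabulary fits a tiny made-up problem: a corridor of five squares where the agent steps left or right and earns a reward of 1 for reaching the last square. The sketch below finds the best action in each state with value iteration, which assumes the rules of the environment are known; RL algorithms estimate the same quantities from experience instead. The corridor, the discount factor `gamma`, and the function name are illustrative assumptions.

```python
def value_iteration(n_states=5, gamma=0.9, sweeps=100):
    """Compute state values and the best action per state for a toy corridor."""
    goal = n_states - 1
    actions = (-1, +1)  # step left or step right

    def step(s, a):
        nxt = min(max(s + a, 0), goal)  # walls at both ends of the corridor
        reward = 1.0 if nxt == goal else 0.0
        return nxt, reward

    V = [0.0] * n_states  # the goal is terminal, so its value stays 0

    def q_value(s, a):
        """Immediate reward plus discounted value of wherever the action leads."""
        nxt, reward = step(s, a)
        return reward + gamma * V[nxt]

    for _ in range(sweeps):
        for s in range(goal):
            V[s] = max(q_value(s, a) for a in actions)
    policy = {s: max(actions, key=lambda a: q_value(s, a)) for s in range(goal)}
    return V, policy

if __name__ == "__main__":
    values, policy = value_iteration()
    print(policy)
    print([round(v, 3) for v in values])
```

The discount factor makes a reward reached sooner worth more than the same reward reached later, so squares closer to the goal get higher values and the best action everywhere is to step right.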

Reinforcement Learning from Human Feedback (RLHF) was the first major application of RL to large language models, developed to align model outputs with human values and preferences. The approach, pioneered by Christiano et al. in 2017 and later popularized by OpenAI’s InstructGPT, follows a three-stage process: first, generate diverse model responses to prompts; second, collect human preferences about which responses are better; and third, train a reward model on these preferences that guides RL optimization of the language model. A later algorithm, Direct Preference Optimization (DPO), showed that the language model being trained can act as its own implicit reward model, so it can be tuned directly on pairs of preferred and rejected responses without a separately trained reward model or a full RL loop. RLHF and DPO share a common goal: make the model more likely to respond the way humans prefer. This process explains why chatbots respond politely, avoid harmful content, and follow instructions: they’ve been systematically rewarded for behaviors that human evaluators prefer.
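The two preference objectives can be written as a few lines each. The sketch below assumes you already have scalar scores: for the RLHF reward model, the rewards it assigns to the human-preferred (“chosen”) and dispreferred (“rejected”) responses; for DPO, the total log-probability of each response under the model being trained and under a frozen reference copy. The reward-model loss is the standard Bradley-Terry form, and the DPO loss follows the published formulation; `beta` controls how far the model may drift from the reference. Function names are hypothetical.

```python
import math

def neg_log_sigmoid(z):
    """Numerically stable -log(sigmoid(z))."""
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))

def reward_model_loss(r_chosen, r_rejected):
    """Bradley-Terry loss: low when the reward model scores the chosen response higher."""
    return neg_log_sigmoid(r_chosen - r_rejected)

def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss from sequence log-probs under the policy (pi_*) and a frozen reference (ref_*)."""
    # How much more the policy favors each response than the reference model does
    chosen_shift = pi_chosen - ref_chosen
    rejected_shift = pi_rejected - ref_rejected
    return neg_log_sigmoid(beta * (chosen_shift - rejected_shift))

if __name__ == "__main__":
    print(reward_model_loss(2.0, 0.0))
    # Policy has raised the chosen response's log-prob and lowered the rejected one's
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

Both losses equal log 2 when the model has no preference either way and fall as it learns to favor the chosen response. The difference is that the reward model’s scores then steer a separate RL step, whereas DPO updates the language model directly.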

While RLHF and DPO are very effective at making models helpful and harmless, they weren’t designed to enhance reasoning capabilities. The reward signals, based on subjective human judgments about response quality, don’t necessarily push models toward better problem-solving strategies or mathematical accuracy. Human raters might prefer responses that sound confident and well-structured even when they contain subtle logical errors, potentially reinforcing persuasive-sounding but flawed reasoning patterns. If ChatGPT unflinchingly agrees with everything you say, even when you are wrong, RLHF training is a likely culprit (or maybe your ideas really are as invaluable, innovative, and ingenious as ChatGPT says!)

Over the past year, there has been a shift in how reinforcement learning is applied to LLMs. Rather than focusing on alignment with human preferences, AI labs have begun using RL specifically to enhance reasoning capabilities. DeepSeek’s R1 model, for example, was trained not to be more helpful or harmless, but to solve complex problems more effectively. This pivot represents a fundamental change in priorities: from making AI systems that communicate pleasantly with humans to developing systems that can accurately respond to difficult questions. Turns out chatting with overly polite parrots isn’t super valuable, after all, if the parrots can’t do simple reasoning. And RL is well-suited to encouraging reasoning in LLMs, because many reasoning problems, like math questions, have answers that can be checked and rewarded automatically. If we ask the LLM to multiply 27 by 3, something it is not well-suited for, we don’t need to know which tokens would be best to predict to get to the final answer. We can just reward the LLM if the final response is 81, and not reward it for anything else.
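The reward described above, 1 for a final answer of 81 and 0 for anything else, can be a tiny rule-based function. The sketch below assumes the last number in the response is the model’s final answer; many setups instead require the answer in a marked format so it can be extracted reliably. The function name is hypothetical.

```python
import re

def correctness_reward(response: str, expected: int) -> float:
    """Return 1.0 if the last number in the response equals the expected answer, else 0.0."""
    numbers = re.findall(r"-?\d+", response)
    if not numbers:
        return 0.0  # no answer at all earns nothing
    return 1.0 if int(numbers[-1]) == expected else 0.0

if __name__ == "__main__":
    print(correctness_reward("20 x 3 = 60, 7 x 3 = 21, so 60 + 21 = 81", 81))
    print(correctness_reward("I think it's 71", 81))
```

Notice that the function never inspects the intermediate steps: the model is free to find any reasoning path, and only the outcome is scored.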

A major family of RL techniques used for LLM training is called policy gradient methods. Sutton and Barto (remember them? The Turing Award ones. They wrote a book on this stuff) describe policy gradient methods as seeking to “maximize performance” by adjusting a policy “based on the gradient of some scalar performance measure.” In plain terms, these methods help the model learn which actions lead to better outcomes by directly adjusting the probability of taking those actions. For example, the classic REINFORCE algorithm, from all the way back in 1992, works by increasing the likelihood of actions that led to good outcomes (high rewards) and decreasing the likelihood of actions that led to poor outcomes. As Sutton and Barto explain, “each increment is proportional to the product of a return and the gradient of the probability of taking the action actually taken divided by the probability of taking that action.” This makes intuitive sense, even for LLMs: if a certain reasoning path led to a correct answer, the model should be more likely to use similar reasoning in the future.
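Here is a minimal sketch of REINFORCE on a one-step problem with three possible actions, assuming a softmax policy over action preferences and made-up rewards. The gradient of the probability divided by the probability is the gradient of the log-probability, which for a softmax works out to `1[k == action] - pi(k)`. As an added assumption, the update subtracts a running-average reward as a baseline (the “REINFORCE with baseline” variant Sutton and Barto also describe), which reduces noise without changing the direction of the expected update.

```python
import math
import random

def softmax(prefs):
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(rewards, steps=3000, lr=0.1, seed=0):
    """REINFORCE with a running-average baseline on a one-step, softmax-policy problem."""
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # policy parameters (one preference per action)
    baseline = 0.0                # running average of rewards seen so far
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        ret = rewards[action]      # the return for this one-step episode
        advantage = ret - baseline
        baseline += (ret - baseline) / t
        for k in range(len(prefs)):
            # grad of log pi(action) w.r.t. prefs[k] for a softmax policy
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * advantage * grad_log
    return softmax(prefs)

if __name__ == "__main__":
    print([round(p, 3) for p in reinforce_bandit([0.1, 0.5, 0.9])])
```

For an LLM, each “action” would be a generated token (or a whole response), but the update has the same shape: raise the log-probability of what was done, scaled by how much better than usual it turned out.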

Plenty of modern algorithms improve on this concept, like Trust Region Policy Optimization (TRPO), which constrains how much the model can change during each update, preventing it from overreacting to a single lucky (or unlucky) experience. Proximal Policy Optimization (PPO) is a simpler and more efficient successor that replaces TRPO’s hard constraint with a clipped objective that can be optimized with ordinary gradient steps. Neither of these was developed to make chatbots reason better; they were initially demonstrated on tasks like controlling simulated robots and playing video games. But they have been hugely successful when applied to LLMs. Most RLHF methods use PPO to align models with human preferences, and it has started to be used for training reasoning models as well. DeepSeek, for example, used a modification of PPO called Group Relative Policy Optimization (GRPO), which generates a group of outputs for the same prompt and scores each one against the group’s average, removing the need for a separate value model and making training more efficient and stable. By training models to arrive at the correct conclusion on sets of problems like those in the AIME math competition, RL methods can increase the reasoning ability of LLMs.
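Two pieces of this can be sketched compactly. PPO’s clipped objective caps how much credit a single sample can give the new policy once its probability ratio against the old policy moves outside `[1 - clip_eps, 1 + clip_eps]`. GRPO’s group-relative advantage scores each sampled output by how its reward compares with the mean and standard deviation of its own group. The sketch below shows only these two ingredients; the full GRPO objective also includes a KL penalty toward a reference model, omitted here, and the function names are hypothetical.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: score each sampled output against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """PPO's clipped objective for one sample; ratio = pi_new(a|s) / pi_old(a|s)."""
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum means the update gains nothing from pushing the ratio past the clip range
    return min(ratio * advantage, clipped_ratio * advantage)

if __name__ == "__main__":
    # Four answers to the same prompt; two were correct (reward 1), two were not
    advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
    print([round(a, 3) for a in advantages])
    print(clipped_surrogate(1.5, 1.0))  # a good sample's credit is capped at 1.2
```

Because the baseline comes from the group itself, no separate value network has to be trained to estimate how good an answer “should” be.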

So, to train an LLM that talks pretty but can also reason, start with a pretrained LLM that regurgitates text from the internet. Then, tune that model to respond with step-by-step reasoning. Finally, reinforce correct reasoning with further tuning on a set of questions. DeepSeek’s approach illustrates this well: they first curated examples with clear reasoning structures, then applied their GRPO method, which compares multiple solutions to the same problem. This creates a feedback loop where the model learns not only when it gets the right answer but also how it gets there. The model even internalized these patterns, learning when to backtrack from wrong paths or double-check its work; these were emergent behaviors that weren’t explicitly programmed. What’s interesting is how these training methods have unlocked capabilities that go beyond the model’s original next-token prediction design, creating systems that can genuinely puzzle through novel problems rather than simply recalling similar examples.
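The feedback loop of “sample several solutions, reward the correct ones, shift probability toward them” can be shown end to end with a toy stand-in for an LLM. In this sketch the “model” is just a softmax over four hypothetical answers to 27 × 3. Each iteration samples a group of answers, rewards a 1 for 81, computes group-relative advantages, and takes one policy-gradient step. It is a stripped-down, GRPO-flavored loop under these assumptions: with a single update per batch the PPO ratio is 1, so clipping never activates, and the KL penalty is omitted.

```python
import math
import random
import statistics

CANDIDATES = [71, 81, 84, 91]  # hypothetical answers the toy "model" can give to 27 x 3
CORRECT = 81

def softmax(prefs):
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def train_toy_reasoner(iterations=200, group_size=8, lr=0.5, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(CANDIDATES)
    for _ in range(iterations):
        probs = softmax(prefs)
        group = rng.choices(range(len(CANDIDATES)), weights=probs, k=group_size)
        rewards = [1.0 if CANDIDATES[i] == CORRECT else 0.0 for i in group]
        std = statistics.pstdev(rewards)
        if std == 0:
            continue  # all right or all wrong: the group offers no relative signal
        mean = statistics.fmean(rewards)
        grads = [0.0] * len(prefs)
        for i, r in zip(group, rewards):
            adv = (r - mean) / std  # better or worse than the rest of the group?
            for k in range(len(prefs)):
                grads[k] += adv * ((1.0 if k == i else 0.0) - probs[k])
        for k in range(len(prefs)):
            prefs[k] += lr * grads[k] / group_size
    return dict(zip(CANDIDATES, softmax(prefs)))

if __name__ == "__main__":
    print({ans: round(p, 3) for ans, p in train_toy_reasoner().items()})
```

A real reasoning model samples whole chains of thought instead of picking from a fixed list, but the credit assignment is the same: responses that beat their group become more likely, and the rest become less likely.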

What happens next

The focus on reasoning LLMs has coincided with remarkable changes in the AI landscape. Open-source efforts are making access to these models and methods relatively cheap and easy, with DeepSeek’s R1 being followed by distilled versions that bring reasoning capabilities to smaller models running on consumer hardware. This knowledge transfer through distillation means that the reasoning abilities of massive frontier models can now be compressed into more efficient packages, allowing wider access to these capabilities. Just this past week, a scientific article entirely created, experiments included, by an LLM system was accepted through peer review. We don’t know yet what easy access to high-level reasoning models will result in, but the potential in education and science alone is intriguing.

As we look toward the future of reasoning in AI, Turing’s original vision seems closer than ever. He imagined machines competing with humans “in all purely intellectual fields,” and we’re now seeing models that can solve complex mathematical problems and generate sophisticated code. As we improve the capabilities of AI systems, though, we need to bring the focus back to their utility. Instead of aiming for machines that compete with humans, let’s make machines that cooperate with us.

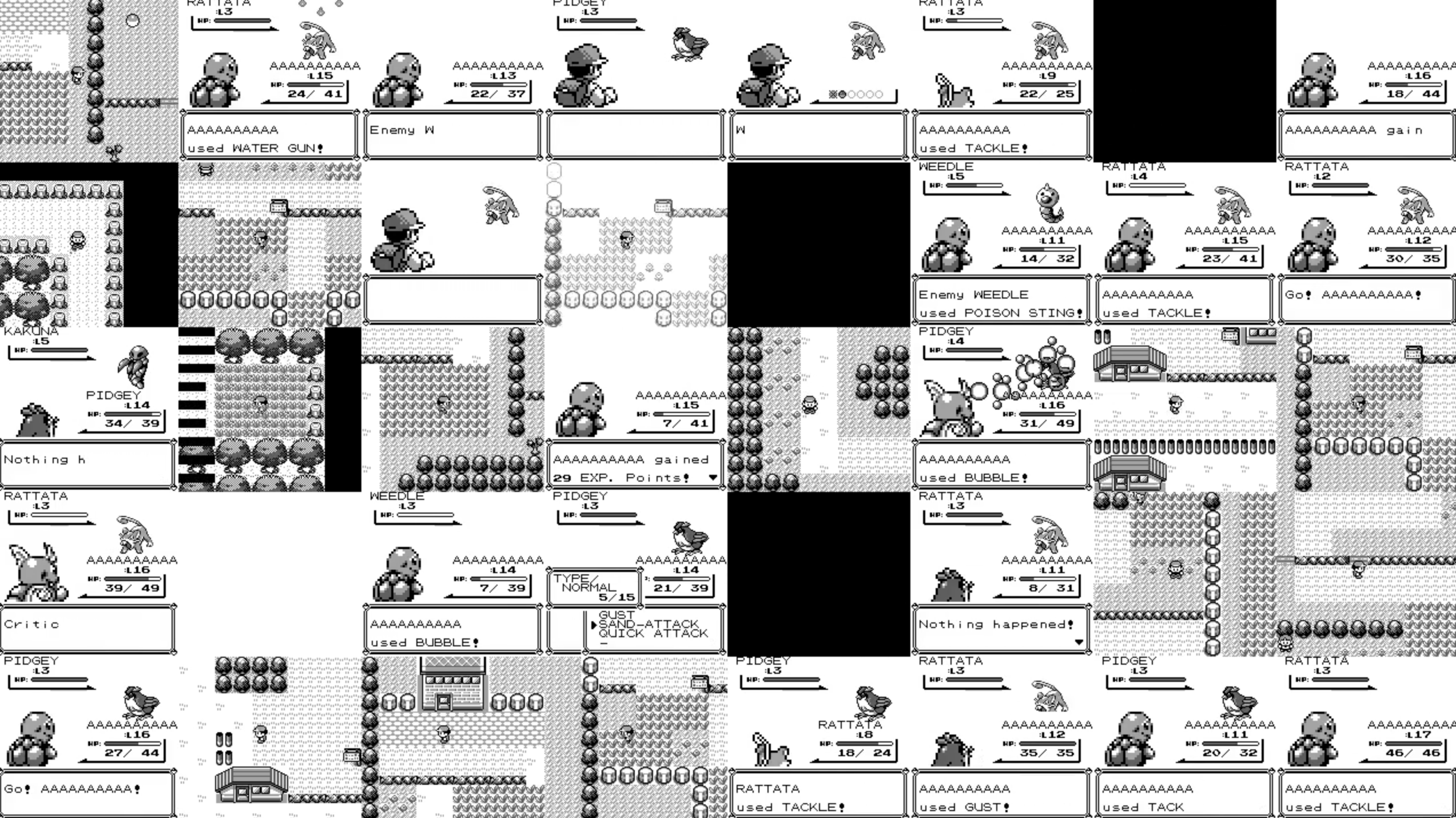

Consider again the example of Claude playing Pokémon on Twitch. The reasoning-trained LLM spent hours wandering in circles, unable to navigate Mt. Moon. But Pokémon Red has already been solved by AI, using a much smaller and more efficient model than an LLM. To be clear: Pokémon is just being used as a benchmark in both cases, a way to measure and show progress in AI. But one approach uses RL to train an LLM to reason generally and then checks whether it can solve the task, while the other uses RL to solve the task directly.

This raises a fundamental question about the direction of AI development. Do we want general-purpose reasoning systems embedded within language models that attempt to do everything (from helping with scientific research to handling daily computing tasks to teaching) all within the same architecture? Or might we be better served by specialized systems working together, with reasoning capabilities distributed across purpose-built components? The singularity-driven vision of a superintelligent monolithic AI may grab headlines, but a more modest, modular approach could better serve human needs while avoiding the concentration of capabilities in systems we don’t fully understand.